Introduction to VAME

VAME is a framework to cluster behavioral signals obtained from pose-estimation tools.

Documentation: LINCellularNeuroscience/VAME

Source code: LINCellularNeuroscience/VAME

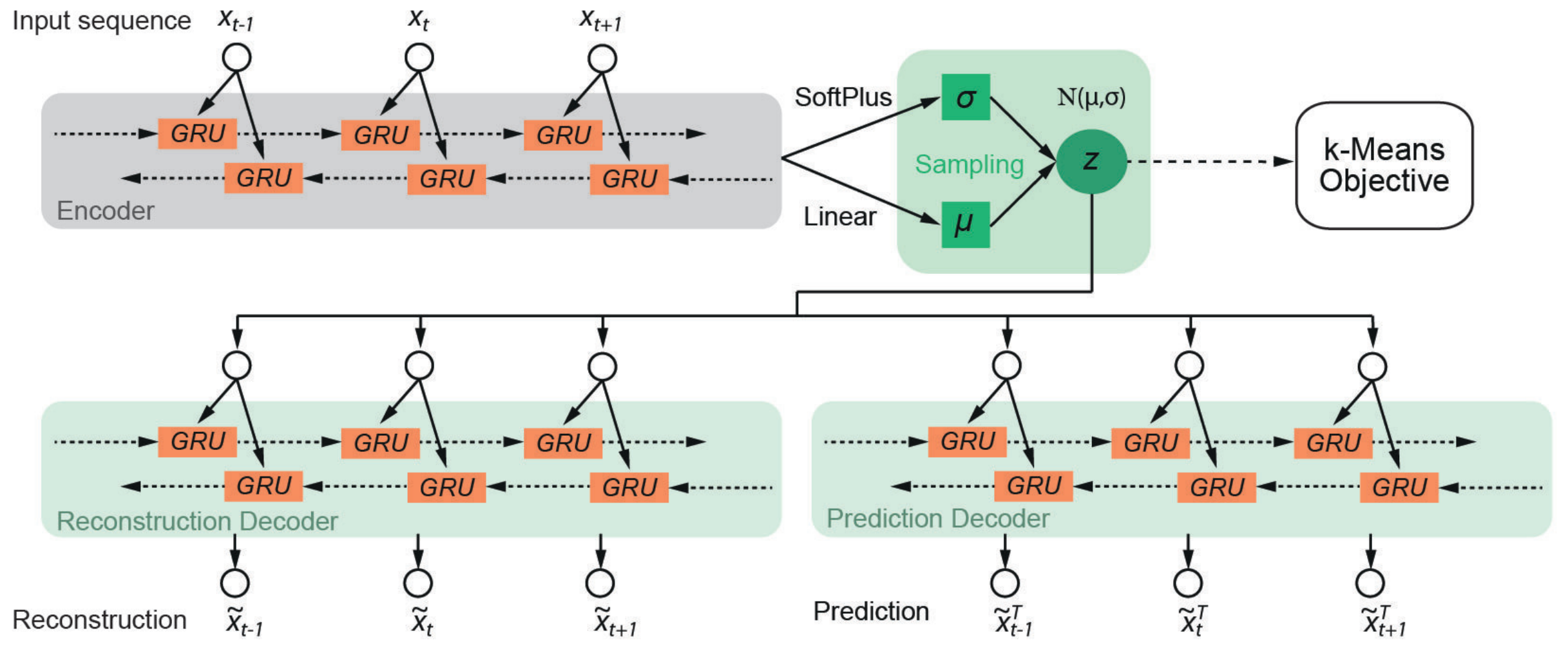

Variational Animal Motion Embedding (VAME) is a probabilistic machine learning framework developed by Luxem et al. (2022) for discovering the latent structure of animal behavior from time series obtained with markerless pose-estimation tools. It is a PyTorch-based deep learning framework that uses recurrent neural networks (RNNs) to model sequential data. To learn the complex underlying data distribution, VAME uses the RNN within a variational autoencoder (VAE) to extract the animal's latent state at every step of the input time series.
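To make the idea of "an RNN inside a variational autoencoder" concrete, here is a minimal toy sketch of the encoder half in plain Python. It is not VAME's implementation: VAME is built on PyTorch, and its actual layers, sizes, decoder, and training loop are not shown here. The helper names (`make_params`, `rnn_encode`, `encode_window`), the plain tanh RNN cell, the untrained random weights, and the cosine/sine input trace are all hypothetical choices for illustration. The sketch maps one window of a pose time series to one latent sample via the reparameterization trick and computes the KL term that a VAE adds to its loss; sliding such a window along the series is one way to obtain a latent state per time step.

```python
import math
import random


def make_params(n_features, hidden_size, latent_size, rng):
    """Draw small random (untrained, hypothetical) weights for the toy encoder."""
    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        "w_in": mat(hidden_size, n_features),       # input -> hidden
        "w_h": mat(hidden_size, hidden_size),       # hidden -> hidden (recurrence)
        "b": [0.0] * hidden_size,                   # hidden bias
        "w_mu": mat(latent_size, hidden_size),      # hidden -> latent mean
        "w_logvar": mat(latent_size, hidden_size),  # hidden -> latent log-variance
    }


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_encode(series, p):
    """Run a plain tanh RNN over the time steps and return the last hidden state."""
    h = [0.0] * len(p["b"])
    for x_t in series:
        pre = [
            a + c + bias
            for a, c, bias in zip(matvec(p["w_in"], x_t), matvec(p["w_h"], h), p["b"])
        ]
        h = [math.tanh(v) for v in pre]
    return h


def reparameterize(mu, logvar, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) (reparameterization trick)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, logvar)]


def kl_to_standard_normal(mu, logvar):
    """KL(N(mu, sigma^2) || N(0, I)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, logvar))


def encode_window(series, p, rng):
    """Map one window of pose features to a latent sample and its KL term."""
    h = rnn_encode(series, p)
    mu, logvar = matvec(p["w_mu"], h), matvec(p["w_logvar"], h)
    return reparameterize(mu, logvar, rng), kl_to_standard_normal(mu, logvar)


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical toy input: 30 time steps of one keypoint's (x, y) position.
    window = [[math.cos(0.2 * t), math.sin(0.2 * t)] for t in range(30)]
    params = make_params(n_features=2, hidden_size=8, latent_size=3, rng=rng)
    z, kl = encode_window(window, params, rng)
    print("latent sample:", [round(v, 3) for v in z])
    print("KL term:", round(kl, 3))
```

In a real VAE the weights would be learned by minimizing reconstruction error plus this KL term; here they are random, so the latent values carry no behavioral meaning.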

Fig. 23 Architecture of the machine learning model in VAME, from Luxem et al., 2022.

Installation

To install and run VAME locally, you need a GPU. If your computer has one and Anaconda is already installed, follow the steps below:

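```shell
git clone https://github.com/LINCellularNeuroscience/VAME.git
cd VAME
conda env create -f VAME.yaml
conda activate VAME   # assumes the environment defined in VAME.yaml is named "VAME"
python setup.py install
```

Finally, install PyTorch for your platform following the instructions at https://pytorch.org/get-started/locally/.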
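Before starting a project, it can help to confirm that PyTorch in the new environment actually sees a GPU. The minimal check below assumes it is run inside the activated conda environment and uses PyTorch's `torch.cuda.is_available()` and `torch.cuda.get_device_name()`; the helper name `gpu_status` is hypothetical and not part of VAME.

```python
def gpu_status():
    """Report whether PyTorch is installed and can see a CUDA GPU."""
    try:
        import torch  # imported lazily so the check also works without PyTorch
    except ImportError:
        return False, "PyTorch is not installed in this environment"
    if torch.cuda.is_available():
        return True, f"CUDA GPU found: {torch.cuda.get_device_name(0)}"
    return False, "PyTorch is installed, but no CUDA GPU is visible"


if __name__ == "__main__":
    ok, message = gpu_status()
    print(message)
```

If the check reports no GPU, a cloud GPU is the alternative discussed in this tutorial.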

If you do not have access to a local GPU, you can use a cloud computing service (e.g., Google Colab) to run a test project on a virtual GPU. See Part 2 of today's tutorial to run VAME on Colab.

References

Luxem, K., Mocellin, P., Fuhrmann, F., Kürsch, J., Remy, S., & Bauer, P. (2022). Identifying Behavioral Structure from Deep Variational Embeddings of Animal Motion. bioRxiv. https://doi.org/10.1101/2020.05.14.095430